Kubernetes (also known as “K8s”) is an open-source container orchestration system for automating the deployment, scaling, and management of containerized applications. It was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes has quickly become the de facto standard for container orchestration and is used by companies such as Uber, Dropbox, and Salesforce to manage their production workloads. In this article, we’ll provide an introduction to Kubernetes, including its key features and how it works.

Kubernetes provides a platform-agnostic way to deploy and manage containerized applications in a clustered environment. It automates the distribution and scheduling of containers across a cluster of nodes and provides many features to help ensure the availability and resilience of your applications.

How Does Kubernetes Work?

At a high level, Kubernetes works by abstracting the underlying infrastructure and providing a uniform way to deploy and manage containerized applications. This is achieved through a combination of APIs, command-line tools, and declarative configuration files.

The basic building block in Kubernetes is the container, a lightweight, standalone, executable package that includes everything needed to run an application (e.g., code, libraries, dependencies). Containers run in isolation from one another while sharing the host's kernel, which makes them easy to deploy and manage.

In Kubernetes, containers are organized into logical units called pods. A pod is a group of one or more containers that are deployed together on the same node and share the same network namespace. Pods are the smallest deployable units in Kubernetes and are used to host applications.
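
As a rough illustration of the pod concept, the sketch below builds a two-container Pod manifest as a Python dictionary and prints it as JSON, a format `kubectl` accepts alongside YAML. The pod name, labels, and image tags are assumptions chosen for the example, not values from this article. Because both containers share the pod's network namespace, the second container can reach the first over `localhost`.

```python
import json

# Illustrative Pod manifest. The names, labels, and image tags below are
# assumptions made for this example only.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {
                # Main application container, listening on port 80.
                "name": "web",
                "image": "nginx:1.27",
                "ports": [{"containerPort": 80}],
            },
            {
                # Helper container in the same pod. It shares the pod's
                # network namespace, so localhost:80 reaches the web container.
                "name": "probe",
                "image": "busybox:1.36",
                "command": [
                    "sh",
                    "-c",
                    "while true; do wget -q -O /dev/null http://localhost:80; sleep 10; done",
                ],
            },
        ]
    },
}


def to_json(manifest: dict) -> str:
    """Serialize a manifest dictionary to indented JSON text."""
    return json.dumps(manifest, indent=2)


if __name__ == "__main__":
    print(to_json(pod_manifest))
```

If the output is saved to a file, it could be submitted to a cluster with `kubectl apply -f <file>`.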

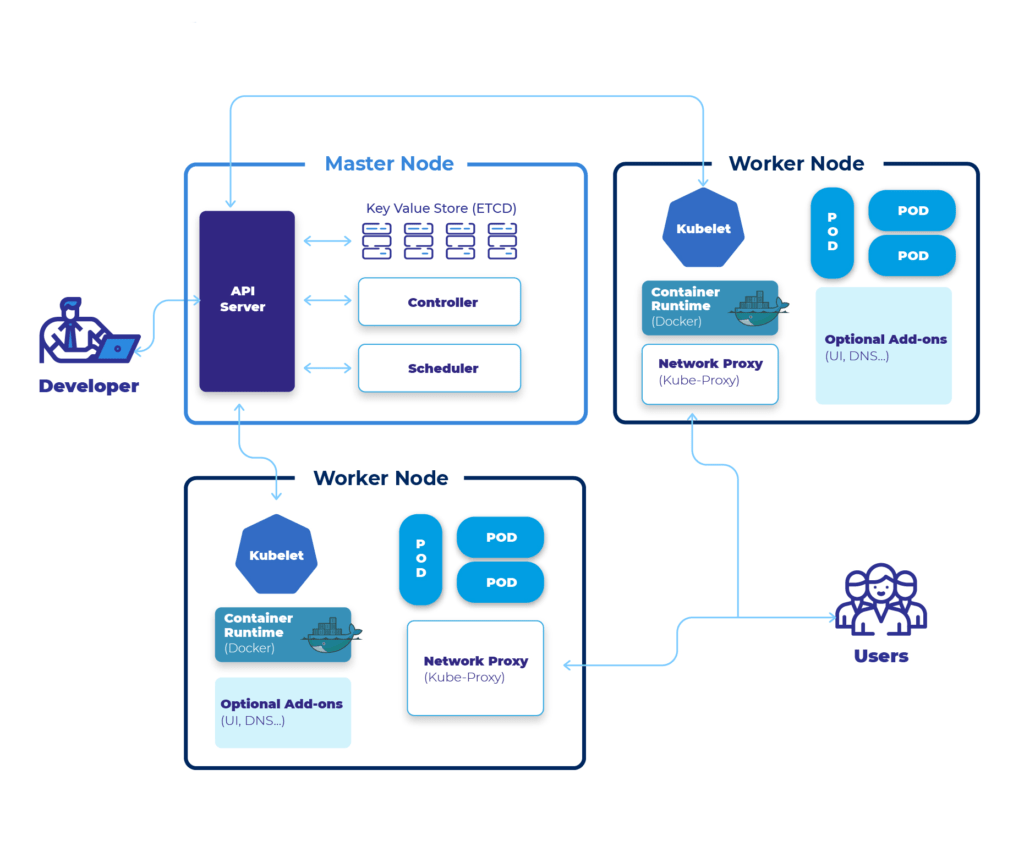

Kubernetes clusters are made up of a number of nodes, which are physical or virtual machines that run a container runtime and the Kubernetes node agents, and that host pods. Nodes are managed by the Kubernetes control plane, which is responsible for scheduling pods, ensuring that they are running correctly, and handling other tasks such as scaling and self-healing.

In addition to pods and nodes, Kubernetes also defines a number of other objects, such as services, which provide a stable network endpoint for a group of pods, and persistent volumes, which allow applications to keep their data even if the pods they are running in are terminated.
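
A service finds its group of pods through labels. The following is a toy model of equality-based label selection, not Kubernetes code: the function names and the sample pods are hypothetical, and it assumes a non-empty selector. A pod is selected only if it carries every key/value pair the selector asks for.

```python
def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Return True if the labels contain every key/value pair in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: dict[str, str], pods: list[dict]) -> list[str]:
    """Return the names of the pods whose labels satisfy the selector."""
    return [pod["name"] for pod in pods if matches(selector, pod["labels"])]


# Hypothetical pods, used only to exercise the functions above.
sample_pods = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "db"}},
]

if __name__ == "__main__":
    print(select_pods({"app": "web"}, sample_pods))
```

Because the selection is based on labels rather than pod names or IP addresses, pods can be replaced freely while the service endpoint stays the same.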

Some of the features provided by Kubernetes include:

- Deployment and scaling of containerized applications

- Self-healing of applications

- Configuration management and secret management

- Resource allocation and quotas

- Service discovery and load balancing

Kubernetes is often used in conjunction with containerization technologies such as Docker and is becoming increasingly popular as a way to deploy and manage microservices-based applications.

Components of Kubernetes

Kubernetes is made up of a number of components that work together to provide a platform for deploying and managing containerized applications. Here are some of the main components of Kubernetes:

- etcd: A distributed key-value store that holds all cluster data, including configuration and state.

- kube-apiserver: The API server is the front-end for the Kubernetes control plane and is responsible for exposing the Kubernetes API.

- kube-scheduler: The scheduler is responsible for scheduling pods (groups of containers) onto nodes in the cluster.

- kube-controller-manager: The controller manager is responsible for running controllers, which are processes that regulate the state of the cluster.

- kubelet: A daemon that runs on each node and is responsible for managing the pods on that node.

- kube-proxy: A network proxy that runs on each node and is responsible for implementing the service abstraction.

These are the core components of Kubernetes. The first four (etcd, kube-apiserver, kube-scheduler, and kube-controller-manager) make up the control plane, which is responsible for managing the cluster, while kubelet and kube-proxy run on every node. In addition to these components, there are also various other tools and components that are commonly used in conjunction with Kubernetes, such as container runtimes (e.g., containerd or CRI-O), monitoring tools, and configuration management tools.
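
The idea of a controller regulating cluster state can be pictured as a reconciliation loop: compare the desired state with the observed state and act to close the gap. The sketch below is a deliberately simplified model of that idea, not the actual kube-controller-manager logic; the function name and pod naming scheme are assumptions made for illustration.

```python
def reconcile(desired: int, running: list[str], next_id: int) -> tuple[list[str], int]:
    """Bring the list of running pods in line with the desired replica count.

    Returns the updated pod list and the next unused pod id.
    """
    running = list(running)  # work on a copy, leave the caller's list untouched
    # Too few pods: start new ones until the desired count is reached.
    while len(running) < desired:
        running.append(f"pod-{next_id}")
        next_id += 1
    # Too many pods: stop the most recently started ones.
    while len(running) > desired:
        running.pop()
    return running, next_id


if __name__ == "__main__":
    pods, next_id = reconcile(3, [], 0)
    print("initial:", pods)
    pods.remove("pod-1")  # simulate a pod failure
    pods, next_id = reconcile(3, pods, next_id)
    print("after failure:", pods)
```

A real controller runs this kind of comparison continuously against the state stored behind the API server, which is what makes self-healing possible.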

Benefits of Kubernetes

Kubernetes offers many benefits for deploying and managing containerized applications:

- Scalability: Kubernetes makes it easy to scale your applications up or down by increasing or decreasing the number of replicas of a given application.

- High availability: Kubernetes can help keep your applications available by automatically restarting failed containers and distributing replicas across multiple nodes.

- Automated rollouts and rollbacks: Kubernetes can automatically roll out new versions of your applications and roll back to a previous version if something goes wrong.

- Resource management: Kubernetes allows you to specify resource limits and quotas for your applications, which helps ensure that one application does not consume too many resources at the expense of others.

- Centralized configuration management: Kubernetes provides a central place to store and manage the configuration of your applications, which can make it easier to deploy and manage applications at scale.

- Service discovery and load balancing: Kubernetes can automatically discover and load balance services within the cluster, which can make it easier to build and deploy microservices-based applications.

- Portability: Kubernetes is platform-agnostic, which means you can use it to deploy your applications on a variety of different infrastructure platforms, including on-premises, in the cloud, or in a hybrid environment.
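
To make the resource management point more concrete, here is a minimal sketch of how per-container requests and limits, and a namespace-wide quota, can be expressed. The container name, image, namespace, and all quantities are hypothetical values picked for the example. The manifests are written as Python dictionaries and printed as JSON.

```python
import json

# Container fragment as it would appear inside a pod spec.
# The image and the quantities are illustrative assumptions.
container = {
    "name": "api",
    "image": "example.com/team/api:1.0",
    "resources": {
        # What the scheduler reserves for the container.
        "requests": {"cpu": "250m", "memory": "128Mi"},
        # The ceiling the container is not allowed to exceed.
        "limits": {"cpu": "500m", "memory": "256Mi"},
    },
}

# ResourceQuota capping the total resources of one namespace.
quota = {
    "apiVersion": "v1",
    "kind": "ResourceQuota",
    "metadata": {"name": "team-quota", "namespace": "team-a"},
    "spec": {
        "hard": {
            "requests.cpu": "4",
            "requests.memory": "8Gi",
            "limits.cpu": "8",
            "limits.memory": "16Gi",
            "pods": "20",
        }
    },
}

if __name__ == "__main__":
    print(json.dumps(container, indent=2))
    print(json.dumps(quota, indent=2))
```

Requests and limits apply to a single container, while the quota bounds the sum across everything in the namespace.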

How to Use Kubernetes

Using Kubernetes typically involves creating a cluster of nodes (either physical machines or virtual machines) and deploying containerized applications to that cluster. The Kubernetes control plane is responsible for managing the cluster and scheduling the deployment of containers onto the nodes.

Here are some general steps for using Kubernetes:

- Install and configure a Kubernetes cluster. This involves setting up one or more machines as nodes, installing and configuring the control plane components (e.g., etcd, kube-apiserver, kube-scheduler, kube-controller-manager), and running the kubelet and a container runtime on every node that will host pods.

- Package your application into a container image using a tool like Docker.

- Create a Kubernetes Deployment to manage the replicas of your application. A Deployment is a higher-level object that declares the desired state of your application. It manages an underlying ReplicaSet and ensures that the correct number of replicas is running at all times.

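- Expose your application using a Kubernetes Service. A Service gives a group of pods a stable network endpoint and balances traffic across them. By default it is reachable only inside the cluster; to accept external traffic, use a Service of type NodePort or LoadBalancer, or place an Ingress in front of it.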

- Use Kubernetes features such as ConfigMaps, Secrets, and Persistent Volumes to manage the configuration and state of your application.
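
The steps above can be sketched as a small set of manifests. The example below is a minimal illustration under stated assumptions: the application name `web`, the image `example.com/web:1.0`, the port numbers, and the ConfigMap contents are all hypothetical. It builds a ConfigMap, a Deployment, and a Service of type LoadBalancer as Python dictionaries and wraps them in a `List` object printed as JSON.

```python
import json

# Hypothetical label shared by the Deployment's pods and the Service selector.
APP_LABELS = {"app": "web"}


def config_map() -> dict:
    """Configuration injected into the application as environment variables."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "web-config"},
        "data": {"LOG_LEVEL": "info"},
    }


def deployment(replicas: int = 3) -> dict:
    """Desired state: `replicas` copies of the (hypothetical) web image."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "web", "labels": APP_LABELS},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": APP_LABELS},
            "template": {
                "metadata": {"labels": APP_LABELS},
                "spec": {
                    "containers": [
                        {
                            "name": "web",
                            "image": "example.com/web:1.0",
                            "ports": [{"containerPort": 8080}],
                            "envFrom": [{"configMapRef": {"name": "web-config"}}],
                        }
                    ]
                },
            },
        },
    }


def service() -> dict:
    """Stable endpoint that forwards port 80 to the pods' port 8080."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "web"},
        "spec": {
            "type": "LoadBalancer",
            "selector": APP_LABELS,
            "ports": [{"port": 80, "targetPort": 8080}],
        },
    }


def bundle() -> dict:
    """Wrap all three objects in a single List so they can be applied together."""
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [config_map(), deployment(), service()],
    }


if __name__ == "__main__":
    print(json.dumps(bundle(), indent=2))
```

Saved to a file, the output could be passed to `kubectl apply -f <file>`. Note that the Service selector and the pod template labels must match, otherwise the Service will not route traffic to the Deployment's pods.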

Conclusion

Kubernetes is a powerful and widely used container orchestration platform that makes it easier to deploy, scale, and manage containerized applications. Its portability, reliability, and scalability make it a strong choice for organizations of all sizes. If you’re new to Kubernetes, we hope this introduction has provided a helpful overview of what it is and how it works. To learn more about Kubernetes and how to get started with it, we recommend checking out the official Kubernetes documentation and exploring the many tutorials and resources available online.

Kubernetes also has many more advanced features and concepts, such as rolling updates, autoscaling, and monitoring, that build on the basic steps for getting started described in this article.